Monday's Musings: Why Next Gen Apps Must Improve Existing Activity Streams

Upcoming Data Deluge Threatens The Effectiveness Of Activity Streams

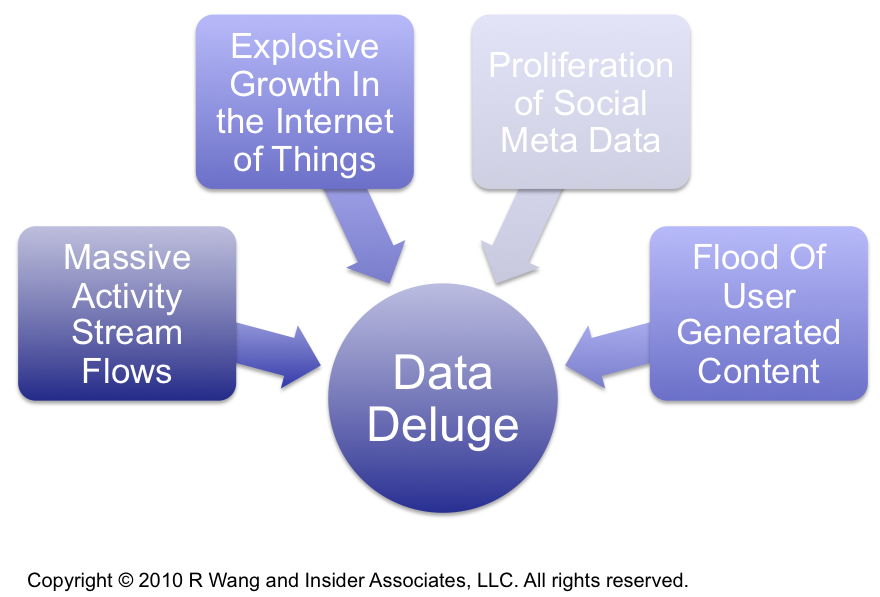

Activity streams, popularized by consumer apps such as Facebook and Twitter, have emerged as the Web 2.0 visualization paradigm for the massive flows of information users face (see Figure 1). As a key element of the dynamic user experiences discussed in the 10 elements of social enterprise apps, activity streams epitomize how apps can deliver contextual and relevant information. Unfortunately, what was once seen as an elegant solution that brought people, data, applications, and information flow into a centralized real-time interface now faces an assault from the exponential growth in data and information sources. Most people can barely keep up with today's information overload, let alone the four forces of data deluge that will likely paralyze both collaboration and decision making (see Figure 2):

- Massive activity stream aggregation by enterprise apps. Every enterprise app seeking sexy social-ness plans to add one or more social networking feeds to its next release. The mixing and mashing of personal and work-related feeds will leave users confused about context and lower existing signal-to-noise ratios. Yet proliferation will continue as users seek to bring aggregated sources of information into one centralized feed.

- Explosive growth in the Internet of Things (IoT). Beyond device-to-device communications, the web of objects, appliances, and living creatures connected through wired and wireless sensors, chips, and tags will drive most of the growth in the internet over the next 5 to 10 years. With an estimated 100 billion net-enabled devices by 2020, these networks seek to discover activity patterns, predict outcomes, and monitor operational health. The massive volumes of sensor data flowing into systems will not only overwhelm users but also handicap the performance of today's data warehouses, analytics platforms, and applications.

- Flood of user generated content (UGC). User generated content continues to grow. Facebook has over 500 million users populating pages with rich social metadata. There are over 300 million blogs. Wikipedia has more than 15 million articles. Content sources will multiply at geometric rates, especially as the BRIC countries (Brazil, Russia, India, and China) increase their adoption.

- Proliferation of social metadata. Organizations seeking a marketing edge must digest, interpret, and assess large volumes of metadata from sources such as Facebook Open Graph. Successfully identifying social graphs requires matching gargantuan volumes of metadata (e.g., likes, check-ins, and groups) through introspection across a vast array of objects. Human-centric and object-centric events will inevitably coexist and engulf unified activity streams.

Figure 1. Activity Streams Improve Collaboration And Deliver Dynamic User Experiences

Figure 2. The Four Forces Of Data Deluge

Filters Improve Signal-To-Noise Ratios And Drive Relevance

Given the tall task of restoring the relevance of activity streams under the four forces of data deluge, users need better filtering tools from their existing solutions. Today's rudimentary filters remind users of the simple search engines of the early 1990s. Users need filters sophisticated enough to cut through big data challenges, and those filters must span media such as pages, books, notes, photos, videos, voice, and others. Based on 23 user scenarios, filters should fall into five major categories:

- People. Requests focus on people, their relationships, and their formal and informal groupings.

- Location. Physical location attributes include spatial coordinates, topology, environmental conditions, vertical position, and others.

- Time and date. Time and date play a key role in parsing historical data, supporting multiple chronological perspectives, and enabling forecasting and simulation.

- Events. Events serve as a mega filter by relating people, location, time and date, and purpose.

- Topics. Topics serve as a broad filter that acts as a generic "other" category in filtering.
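One way to picture these five categories is as small, composable predicates over stream items that a user can stack. The Python below is a minimal sketch under stated assumptions, not a product design: the `Activity` record, its field names, and helpers such as `by_people` and `apply_filters` are hypothetical, and location matching assumes a simple great-circle (haversine) radius around a point.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime
from math import asin, cos, radians, sin, sqrt
from typing import Callable, Optional


@dataclass
class Activity:
    """Hypothetical activity-stream item; field names are illustrative only."""
    actor: str
    text: str
    timestamp: datetime
    location: Optional[tuple[float, float]] = None  # (latitude, longitude)
    event: Optional[str] = None
    topics: set[str] = field(default_factory=set)


# A filter is any function that says whether an item stays in the stream.
Filter = Callable[[Activity], bool]


def by_people(people: set[str]) -> Filter:
    """People: keep items posted by anyone in the given set."""
    return lambda a: a.actor in people


def by_location(lat: float, lon: float, radius_km: float) -> Filter:
    """Location: keep items within radius_km of (lat, lon), via haversine distance."""
    def within(a: Activity) -> bool:
        if a.location is None:
            return False
        lat1, lon1, lat2, lon2 = map(radians, (lat, lon, *a.location))
        h = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
        return 2 * 6371.0 * asin(sqrt(h)) <= radius_km  # 6371 km: mean Earth radius
    return within


def by_time(start: datetime, end: datetime) -> Filter:
    """Time and date: keep items in the half-open window [start, end)."""
    return lambda a: start <= a.timestamp < end


def by_event(name: str) -> Filter:
    """Events: keep items tagged with a named event."""
    return lambda a: a.event == name


def by_topic(topic: str) -> Filter:
    """Topics: the catch-all category, matched against free-form tags."""
    return lambda a: topic in a.topics


def apply_filters(stream: list[Activity], *filters: Filter) -> list[Activity]:
    """Keep only the items that pass every filter (logical AND)."""
    return [a for a in stream if all(f(a) for f in filters)]


# Usage with two made-up items: one in San Francisco, one in New York.
stream = [
    Activity("alice", "Kickoff notes", datetime(2010, 10, 4, 9), (37.77, -122.42), "Q4 kickoff", {"crm"}),
    Activity("bob", "Lunch photo", datetime(2010, 10, 4, 12), (40.71, -74.01), None, {"food"}),
]
hits = apply_filters(
    stream,
    by_people({"alice", "bob"}),
    by_location(37.77, -122.42, 50),
    by_topic("crm"),
)
print([a.text for a in hits])  # ['Kickoff notes']
```

Treating each category as an independent predicate keeps filters cheap to combine: an "event" filter could itself be built by AND-ing people, location, and time predicates, which mirrors the idea of events as a mega filter.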

The Bottom Line: Users Need Greater Control Over Their Point Of View And Next Gen Apps Must Deliver

Filters alone will not provide enough firepower to put users back in control. Users must be able to manage filters themselves easily, and the system should identify patterns through self-learning. Text analytics, natural language processing, and complex sentiment algorithms will play a role. At a minimum, user-driven advanced filters should include:

- Saved filters. Users save their library of filters and share it with other users.

- Trending. Users apply layers of filters to correlate complex multi-dimensional patterns.

- Simulations. Users proactively test out scenario plans with existing data.

- Predictions. Users apply pattern recognition and trending to test hypotheses.
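Saved filters and trending can be sketched in a few lines if a filter is stored as plain data rather than code, so it can be exported, shared, and layered on top of other filters. The Python below is a minimal illustration under assumptions: the `FilterLibrary` class, its `save`, `to_json`, `from_json`, and `layer` methods, and the dictionary-shaped stream items are hypothetical, and "trending" is reduced to counting matching items per day.

```python
import json
from collections import Counter


def matches(item: dict, spec: dict) -> bool:
    """True if the item satisfies every key in a saved filter spec.

    List-valued fields (e.g. topics) match when they contain the wanted value;
    scalar fields must be equal.
    """
    for key, wanted in spec.items():
        value = item.get(key)
        if isinstance(value, (list, set, tuple)):
            if wanted not in value:
                return False
        elif value != wanted:
            return False
    return True


class FilterLibrary:
    """Hypothetical per-user library of named filters that can be shared as JSON."""

    def __init__(self) -> None:
        self._filters: dict[str, dict] = {}

    def save(self, name: str, spec: dict) -> None:
        self._filters[name] = dict(spec)

    def to_json(self) -> str:
        return json.dumps(self._filters, sort_keys=True)

    @classmethod
    def from_json(cls, payload: str) -> "FilterLibrary":
        lib = cls()
        lib._filters = json.loads(payload)
        return lib

    def layer(self, stream: list[dict], *names: str) -> list[dict]:
        """Apply several saved filters on top of each other (logical AND)."""
        specs = [self._filters[n] for n in names]
        return [item for item in stream if all(matches(item, s) for s in specs)]


# Usage with made-up stream items.
stream = [
    {"actor": "alice", "topics": ["crm"], "region": "EMEA", "day": "2010-10-04"},
    {"actor": "bob", "topics": ["crm"], "region": "EMEA", "day": "2010-10-04"},
    {"actor": "carol", "topics": ["crm"], "region": "APAC", "day": "2010-10-05"},
    {"actor": "dave", "topics": ["erp"], "region": "EMEA", "day": "2010-10-05"},
]
lib = FilterLibrary()
lib.save("crm", {"topics": "crm"})
lib.save("emea", {"region": "EMEA"})

shared = FilterLibrary.from_json(lib.to_json())  # a colleague imports the library
layered = shared.layer(stream, "crm", "emea")
by_day = Counter(item["day"] for item in layered)  # a crude "trend" over the layered view
print(by_day)  # Counter({'2010-10-04': 2})
```

Storing filters as data instead of functions is the design choice that makes sharing trivial; simulations and predictions would need far richer models than this sketch attempts.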

Your POV.

Buyers, do you need help understanding how activity streams can improve adoption and ROI? Are you suffering from data deluge? Sellers and vendors, do you want to test out your next generation product ideas? You can post or send your thoughts to rwang0 at gmail dot com or r at softwareinsider dot org, and we’ll preserve your anonymity.

Please let us know if you need help with your next gen apps strategy efforts. Here’s how we can help:

- Providing contract negotiations and software licensing support

- Evaluating SaaS/Cloud options

- Assessing apps strategies (e.g., single instance, two-tier ERP, upgrade, custom dev, packaged deployments)

- Designing end to end processes and systems

- Comparing SaaS/Cloud integration strategies

- Assisting with legacy ERP migration

- Engaging in an SCRM strategy

- Planning upgrades and migration

- Performing vendor selection

Reprints

Reprints can be purchased through the Software Insider brand. To request official reprints in PDF format, please contact [email protected].

Disclosure

Although we work closely with many mega software vendors, we want you to trust us. For the full disclosure policy please refer here.

Copyright © 2010 R Wang and Insider Associates, LLC. All rights reserved.

R "Ray" Wang