Product Review: Informatica Addresses The Impending Big Data Challenge With Release 9.1

Big Data Emerges As A Challenge In A World Of Unstructured Data Proliferation

Data volumes continue to explode with the proliferation of devices, social media tools, video usage, and emerging forms of both structured and unstructured data. Data may now be growing faster than the pace set by Moore's Law. Organizations face a significant challenge in dealing with this data deluge. Across the five pillars of consumer tech affecting enterprise software, organizations must deal with:

- Managing unstructured, user-generated social interaction data. Massive smartphone adoption and social network usage will converge to create massive data volumes. Twitter now has 106 million users generating over 3 billion requests per day. Most analyst firms forecast at least 300 million smartphones in use among the 1.6 billion mobile devices sold in 2010. Sensing data, call detail records, location-based information, digital media, and other sources will lead the individual data explosion.

- Coping with an explosion in transactional data volumes. A collision of compliance, regulation, and digitalization leads to an exponential increase in transactional data. Audit and compliance requirements lead to an increase in security log files, network and system event logs, emails, and searchable messaging communications. Add significant automation of business processes, and Constellation Research estimates that online transactional data and repositories will grow 66% annually. Most data centers now commit 25% of their infrastructure spend to storage for data growth.
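To put the 66% estimate in perspective, here is a back-of-the-envelope sketch. It is not part of the Constellation Research estimate itself: it assumes the growth compounds at a constant annual rate, and the function names are purely illustrative.

```python
import math


def doubling_time_years(annual_growth_rate: float) -> float:
    """Years needed to double at a constant compound annual growth rate."""
    return math.log(2) / math.log(1 + annual_growth_rate)


def growth_multiple(annual_growth_rate: float, years: int) -> float:
    """How many times larger the data set is after `years` of compounding."""
    return (1 + annual_growth_rate) ** years


rate = 0.66  # the 66% annual growth estimate cited above
months_to_double = doubling_time_years(rate) * 12
five_year_multiple = growth_multiple(rate, 5)

print(f"Doubling time: {months_to_double:.1f} months")
print(f"Growth over 5 years: {five_year_multiple:.1f}x")
```

Under these assumptions, transactional data doubles roughly every 16 months and grows more than twelvefold in five years. That doubling period is shorter than the 18 to 24 months commonly associated with Moore's Law.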

Informatica 9.1 Focuses On Big Data

Announced June 6th, 2011, Informatica 9.1 is generally available. The new release focuses on four key themes that address the Big Data issue:

- Delivering a near open data integration platform. The new release supports Hadoop, big transactional data, and big interaction data. Hadoop support includes connectivity to the HDFS file system and to MapReduce for big data processing. Big transactional data features support EMC Greenplum and other DW appliance vendors soon, in addition to existing Oracle, IBM DB2, IBM Netezza, and Teradata connectivity. Big interaction data connectivity supports the Big 3: Facebook, Twitter, and LinkedIn.

Point of View (POV): Hadoop provides the low-cost processing and storage platform required to address the big data issue. While Informatica 9.1 is designed for mind-boggling petabyte-scale connectivity to OLAP and OLTP data stores today, power users will push for exabyte scale in 12 to 18 months. The new release also delivers a complementary relational/data warehouse appliance package. For social data, organizations will improve their ability to correlate social media signals with transactional data to deliver new insights across the organization. Expect Hadoop and social media connectors to be delivered later in June 2011.

- Incorporating master data management technologies with Big Data. The new release incorporates key assets from the Siperian Master Data Management (MDM) acquisition. Users gain new multi-style and multi-domain MDM approaches. Data governance is addressed via reusable data quality policies, while proactive data quality builds on Informatica's complex event processing technology to identify data quality exceptions and alert users to them.

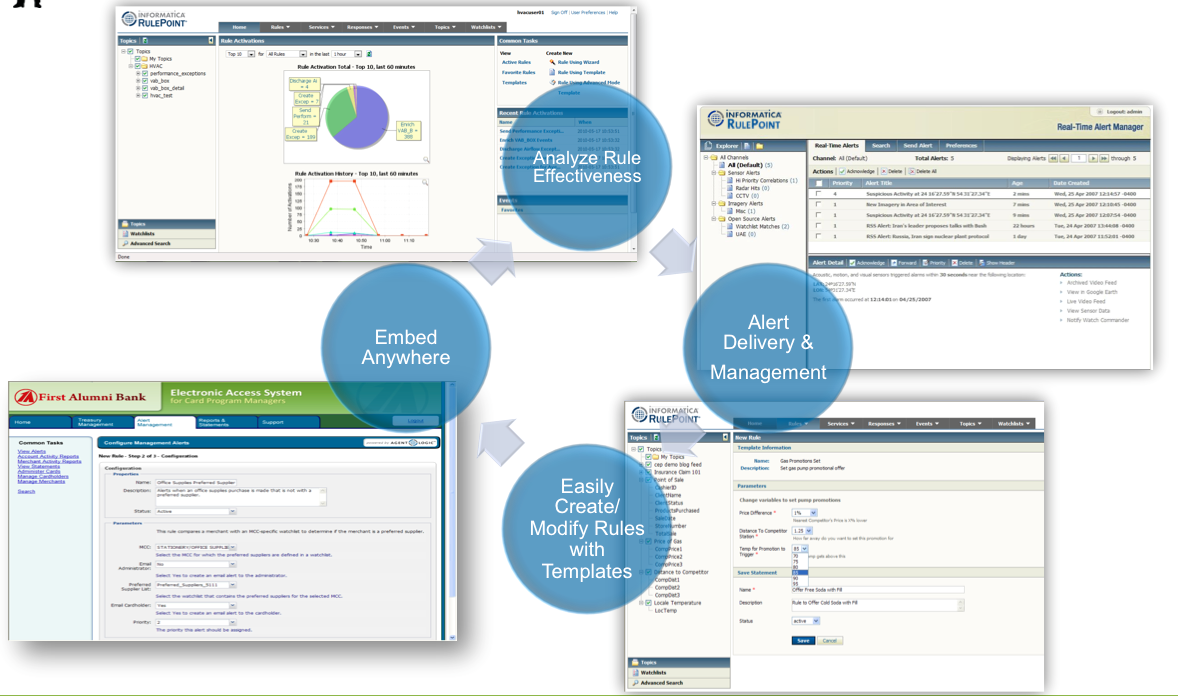

POV: Informatica's MDM offering remains near the top of shortlists at Constellation Research. The solution delivers true multi-style, multi-domain, multi-deployment, and multi-use capabilities on one technology platform. Users gain the ability to manage data quality rules in source applications that not only propagate downstream, but also take advantage of complex event processing (CEP) to provide proactive alerting (see Figure 1). Informatica's RulePoint CEP engine also provides key geo-aware processing capabilities for advanced scenarios.

Figure 1. Informatica's Self Service Proactive Monitoring

Source: (Informatica)

- Self-service empowers all users to obtain relevant information while IT remains in control. Self-service takes a role-based design that addresses the needs of business users, project owners, IT analysts, developers, and data stewards. Users gain more capabilities to access data controls, define rules, and adjust parameters. Project owners can source business entities within applications without having to understand schemas and data models.

POV: Informatica's design point traditionally focuses on power users. The shift to more self-service capabilities provides a good start toward addressing the needs of tech-savvy business users and reducing the overall cost of ownership.

- Adaptive data services provide critical information governance capabilities. Centrally managed processes include multi-protocol data provisioning for data virtualization, integrated data quality for data governance, and policy-driven enforcement for data governance. Organizations will gain the ability to quickly provision data services through ODBC or JDBC, as a web service, or to PowerCenter. Read and write data quality, data quality templates and data quality rules are delivered out of the box.

POV: The information governance problem mirrors the data deluge problem. Disparate tools and disparate governance processes afflict every enterprise. Adaptive data services bring order to a chaotic array of protocols and policies.

The Bottom Line: Organizations Can Apply Solutions Such As Informatica 9.1 To Master The Information Supply Chain

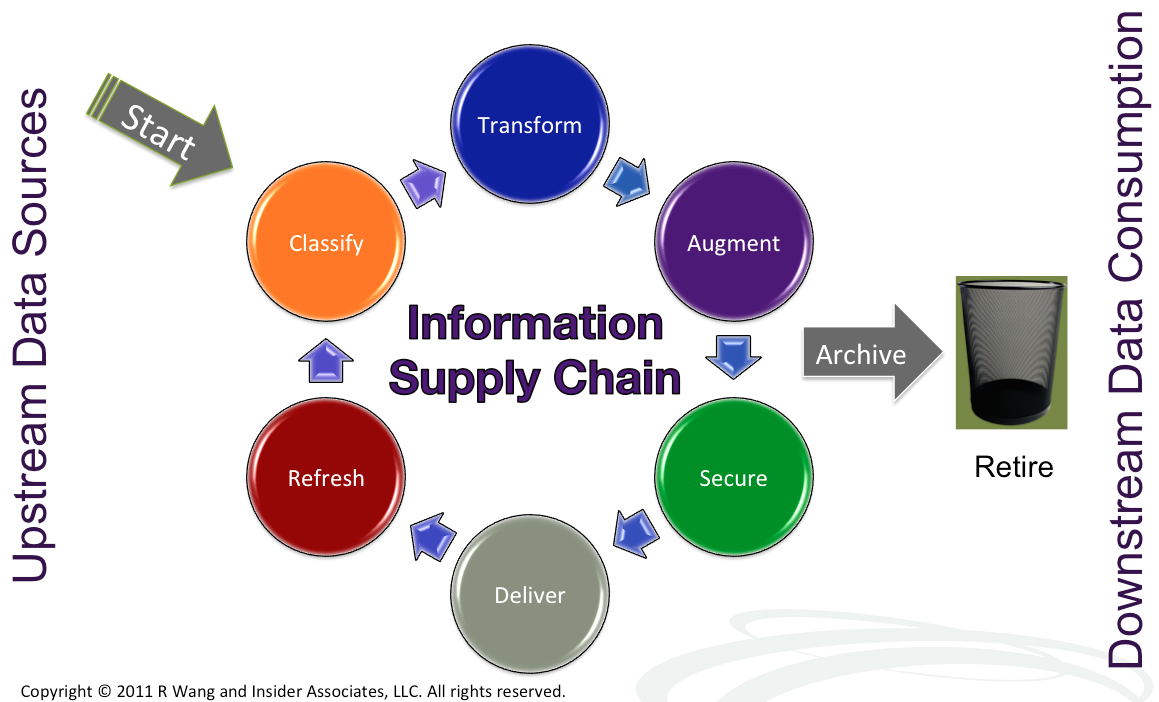

The technology solutions to address complex information management processes often reside in a disparate collection of best of breed apps. With the big data issue looming, organizations must move beyond a technology decision. More than just dealing with an explosion of data, organizations must support business processes that rely on the information supply chain. Eight key areas of the information supply chain include:

Source: Insider Associates, LLC

- Classify. Classification schemes tie relationships back to structured and unstructured data. Classifications could include subjects, location, individuals, organizations, relationships, and other metadata.

- Transform. Data from multiple source systems requires transformation into a format compatible with the destination system. Techniques include translating coded values, sorting, joining, transposing, splitting, and disaggregation. Maintaining source system lineage makes it possible to revert or undo changes.

- Augment. Augmentation enables users to provide additional information to the data. Examples include third party data from commercial sources, government agencies, and market research firms.

- Secure. Data privacy and security should map back to existing policies. In some cases, data should be masked or encrypted. Simple security could include classifications for public, private, and restricted. Most systems will map back to role-based security systems.

- Deliver. Delivery should include both automated and manual techniques. Subscribing systems could trigger requests based on rules and policies in complex event processing engines or simple thresholds. Manual delivery mechanisms should log back to interaction engines.

- Refresh. The half-life of cleansed data can range from as little as 10 seconds for location-based status updates to 3 months for the address of a transient college student. Information supply chains must continually refresh information to stay relevant.

- Archive. Unused data can degrade performance, create regulatory compliance nightmares, and expose legal risks. By moving inactive data from production systems to backups, organizations can leave important and necessary data indexed and accessible without bogging down existing systems.

- Retire. Organizations can rid themselves of older data that is no longer required for compliance or is irrelevant. Data can be encrypted and stored offsite or even hard erased.
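Three of the stages above (Transform, Secure, and Refresh) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how Informatica 9.1 or any other product implements them. The `Record` class, the code table, and the helper names are all hypothetical. The refresh windows simply echo the examples in the Refresh bullet, treating "3 months" as 90 days.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta

# Hypothetical code table for the Transform stage.
COUNTRY_CODES = {"US": "United States", "DE": "Germany"}

# Hypothetical refresh windows, taken from the Refresh examples above.
MAX_AGE = {
    "location": timedelta(seconds=10),
    "address": timedelta(days=90),
}


@dataclass
class Record:
    kind: str
    values: dict
    source_system: str
    captured_at: datetime
    lineage: list = field(default_factory=list)


def transform(record: Record) -> Record:
    """Translate a coded value, logging the original so the change can be undone."""
    code = record.values.get("country")
    if code in COUNTRY_CODES:
        record.lineage.append(("country", code, record.source_system))
        record.values["country"] = COUNTRY_CODES[code]
    return record


def mask(value: str, visible: int = 4) -> str:
    """Hide all but the last `visible` characters of a sensitive value."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]


def needs_refresh(record: Record, now: datetime) -> bool:
    """True when the record is older than the window allowed for its kind."""
    return now - record.captured_at > MAX_AGE[record.kind]


# Usage: a 120-day-old address record pulled from a hypothetical CRM system.
now = datetime(2011, 6, 6, 12, 0, 0)
rec = Record(
    kind="address",
    values={"country": "US", "account": "1234567890"},
    source_system="crm",
    captured_at=now - timedelta(days=120),
)
transform(rec)
rec.values["account"] = mask(rec.values["account"])
stale = needs_refresh(rec, now)
print(rec.values, rec.lineage, stale)
```

Keeping the original coded value in `lineage` is what allows a transformation to be reverted later, and driving refresh from a per-kind window reflects the point that different data ages at very different rates.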

Organizations that address the challenges of big data will gain significant strategic advantages in better analytical insight, right time engagement, and scalable operational efficiencies.

Your POV.

Will you make the move to address Big Data with Informatica 9.1? Will you consider other options? What will drive you to go with one platform? Add your comments to the blog or send us a comment at r (at) softwareinsider (dot) org or info (at) ConstellationRG (dot) com.

Let us know how we can assist with:

- Building a Cloud Strategy

- Designing your apps strategy

- Crafting a social business strategy

- Short-listing vendors

- Negotiating your software contract

Related Links

20110607 Information Week - Doug Henschen "Big Data: Informatica Tackles The High-Velocity Problem"

20110605 IDG News Service – Chris Kanaracus “Informatica Adds Support for 'big Data,' Hadoop”

Related Resources

20110509 Monday’s Musings: Using MDM To Build A Complete Customer View In A Social Era

20090831 Monday’s Musings: Why Every Social CRM Initiative Needs An MDM Backbone

20110102 Research Summary: Software Insider’s Top 25 Posts For 2010

20101216 Best Practices: Five Simple Rules For Social Business

20110104 Research Report: Constellation’s Research Outlook For 2011

20101004 Research Report: How The Five Pillars Of Consumer Tech Influence Enterprise Innovation

Reprints

Reprints can be purchased through Constellation Research, Inc. To request official reprints in PDF format, please contact [email protected].

Disclosure

Although we work closely with many mega software vendors, we want you to trust us. For the full disclosure policy, stay tuned for the full client list on the Constellation Research website.

Copyright © 2011 R Wang and Insider Associates, LLC All rights reserved.

R "Ray" Wang
