Monday's Musings: Beyond The Three V's of Big Data - Viscosity and Virality

Revisiting the Three V's of Big Data

It's time to revisit the original post from July 4th, 2011 on the Three V's of big data. Here's the recap:

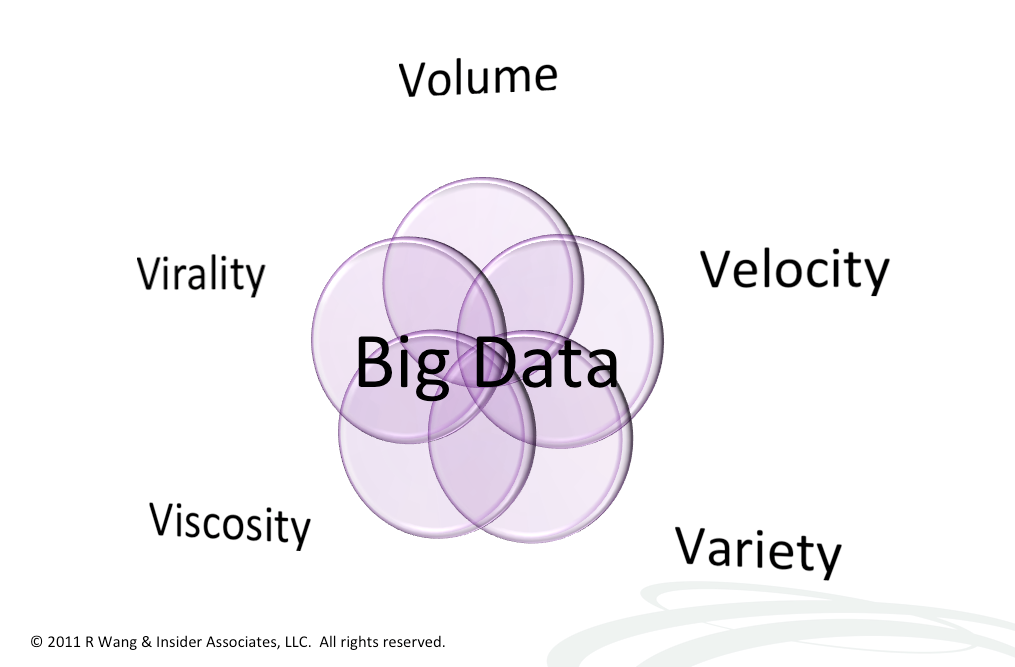

Traditionally, big data describes data that's too large for existing systems to process. Over the past three years, experts and gurus in the space have added more characteristics to define big data. As big data enters the mainstream language, it's time to revisit the definition (see Figure 1).

- Volume. This original characteristic describes the relative size of data to the processing capability. Today a large number may be 10 terabytes. In 12 months 50 terabytes may constitute big data if we follow Moore's Law. Overcoming the volume issue requires technologies that store vast amounts of data in a scalable fashion and provide distributed approaches to querying or finding that data. Two options exist today: Apache Hadoop-based solutions and massively parallel processing databases such as Calpont, EMC Greenplum, EXASOL, HP Vertica, IBM Netezza, Kognitio, ParAccel, and Teradata Kickfire.

- Velocity. Velocity describes the frequency at which data is generated, captured, and shared. The growth in sensor data from devices and web-based clickstream analysis now creates requirements for more real-time use cases. The velocity of large data streams powers the ability to parse text, detect sentiment, and identify new patterns. Real-time offers in a world of engagement require fast matching and immediate feedback loops so promotions align with geolocation data, customer purchase history, and current sentiment. Key technologies that address velocity include stream processing and complex event processing. NoSQL databases are used when relational approaches no longer make sense. In addition, the use of in-memory databases (IMDB), columnar databases, and key-value stores helps improve retrieval of pre-calculated data.

- Variety. A proliferation of data types from social, machine-to-machine, and mobile sources adds new data types to traditional transactional data. Data no longer fits into neat, easy-to-consume structures. New types include content, geospatial, hardware data points, location-based, log data, machine data, metrics, mobile, physical data points, process, RFID, search, sentiment, streaming data, social, text, and web. The addition of unstructured data such as speech, text, and language increasingly complicates the ability to categorize data. Some technologies that deal with unstructured data include data mining, text analytics, and noisy text analytics.

Figure 1. The Three V's of Big Data

Contextual Scenarios Require Two More V's

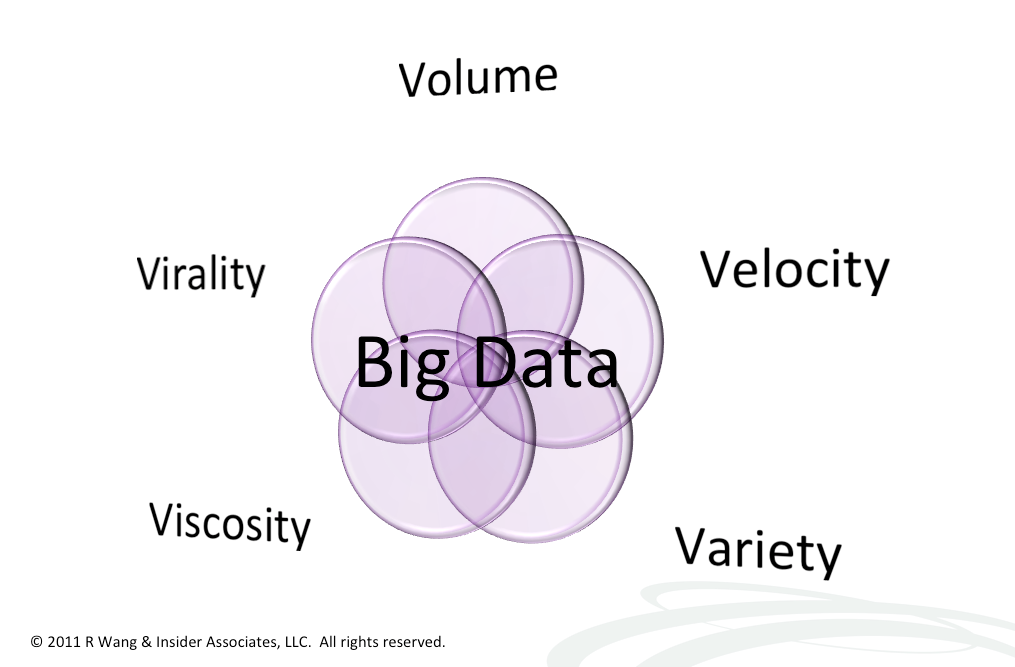

In an age where we shift from transactions to engagement and then to experience, the forces of social, mobile, cloud, and unified communications add two more big data characteristics that should be considered when seeking insights. These characteristics highlight the importance and complexity required to solve context in big data.

- Viscosity - Viscosity measures the resistance to flow in the volume of data. This resistance can come from different data sources, friction from integration flow rates, and the processing required to turn the data into insight. Technologies to deal with viscosity include improved streaming, agile integration buses, and complex event processing.

- Virality - Virality describes how quickly information gets dispersed across people-to-people (P2P) networks. Virality measures how quickly data is spread and shared to each unique node. Time is a determining factor along with rate of spread.

Figure 2. The Five V's of Big Data
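One way to make the virality definition concrete is to count the unique nodes a piece of information reaches within a fixed time window. The sketch below is only an illustration under stated assumptions: the `virality` function, the `(timestamp, node_id)` event format, and the "unique nodes per hour" unit are hypothetical choices made for this example, not a standard metric or anything defined in the original post.

```python
from datetime import datetime, timedelta


def virality(share_events, window):
    """Unique nodes reached per hour within `window` of the first share.

    share_events: iterable of (timestamp, node_id) pairs (hypothetical format).
    window: a timedelta measured from the earliest event.
    """
    events = sorted(share_events)
    if not events:
        return 0.0
    start = events[0][0]
    cutoff = start + window
    # A set keeps each node once, matching "shared to each unique node".
    reached = {node for ts, node in events if ts <= cutoff}
    hours = window.total_seconds() / 3600
    return len(reached) / hours


# Illustrative data only.
t0 = datetime(2012, 1, 1, 9, 0)
events = [
    (t0, "alice"),
    (t0 + timedelta(minutes=10), "bob"),
    (t0 + timedelta(minutes=20), "carol"),
    (t0 + timedelta(minutes=25), "bob"),  # repeat share, counted once
    (t0 + timedelta(hours=3), "dave"),    # falls outside a 1-hour window
]
rate = virality(events, timedelta(hours=1))
```

Both factors named above show up here: the window captures time, and the count of unique nodes divided by that window captures the rate of spread. Widening the window to four hours picks up the late share but lowers the hourly rate.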

The Bottom Line: Big Data Provides The Key Element In Moving From Real Time To Right Time

Context represents the next frontier as we move to intelligent systems. Big data systems and techniques will provide the key infrastructure in delivering context within business processes, across relationships, by geospatial position, and within a time spectrum. As engagement systems make the shift to experiential systems, expect context to provide the key filter in improving signal-to-noise ratios. Big data provides the context required to move from real time to right time.

Catch Constellation's Big Data Coverage From VP and Principal Analyst - Neil Raden

Upcoming Report: Analytics in the Organization: Types, Roles and Skills

“Analytics” is a critical component of enterprise architecture capabilities, though most organizations have only recently begun to develop experience using quantitative methods. This report discusses the role of analytics, why it is a difficult topic for many, and what actions you should take. It lays out the various meanings of analytics and provides a framework for aligning the various types of analytics with the associated roles and skill sets.

Blog Post: What Is a Data Scientist (and What Isn't)

Big Data doesn't happen by itself. Because the tools and techniques are different from traditional Data Warehousing/Business Intelligence approaches, Big Data requires different skills. This role has become known as the Data Scientist. Have a look at analyst Neil Raden's take on the data scientist.

Watch for the following:

- Understanding Data: Mechanical MDM, Ontology, Machine Learning

- Future of IBM's Watson

- Tainted Truth: How to Read Statistical Research

- NoSQL: The End of the Relational Database

- Analytical Platforms: Revenge of the Relational Database

- Next Wave of BI

- The Data Scientist

- Planning and Performance Management Supercharged with Analytics

- Hadoop vs. ETL vs. ELT

- CEP: From Product Class to Wider Application

- Real-Time Decision-Making: Where It Fits

- Are Rules-Based Management Systems Dead?

- Skills Checklist for Big Data

- Skills Checklist for Business Analytics

- Interactive Data Visualization

- Let the Gorillas Write the Script: Forget Requirements

- Data Warehouse Rescue: What to Do with your Legacy Warehouse

- BI Rescue: What to Do with your Legacy BI

Your POV

What business problem will require you to start with Big Data? What are the key outcomes? Where do you expect to move the needle? Add your comments to the blog or send us a comment at R (at) SoftwareInsider (dot) org or R (at) ConstellationRG (dot) com.

Resources

- Monday's Musings: The Three V's of Big Data

- Research Report: Rethink Your Next Generation Business Intelligence Strategy

- Monday’s Musings: Balancing The Six S’s In Consumerization Of IT

- Monday’s Musings: A Working Vendor Landscape For Social Business

- Research Report: The Upcoming Battle For The Largest Share Of The Technology Budget Part 1

- Research Report: How The Five Pillars Of Consumer Tech Influence Enterprise Innovation

- Best Practices: Five Simple Rules For Social Business

Reprints

Reprints can be purchased through Constellation Research, Inc. To request official reprints in PDF format, please contact Sales.

Disclosure

Although we work closely with many mega software vendors, we want you to trust us. For the full disclosure policy, stay tuned for the full client list on the Constellation Research website.

* Not responsible for any factual errors or omissions. However, happy to correct any errors upon email receipt.

Copyright © 2001-2012 R Wang and Insider Associates, LLC. All rights reserved.

R "Ray" Wang
